The kernel vyos-debug flag enables profiling for config loader

`vyos-1x/src/helpers/vyos-boot-config-loader.py` within `/usr/libexec/vyos/init/vyos-router`

The issue is that measured time is rounded to decimal, making it useless in practical profiling.

Trivial commands like set integer can take as low as 0.01 seconds.

This change increases accuracy to milliseconds: https://github.com/vyos/vyatta-cfg/pull/47

The flag is exported only for boot as follows export VYOS_DEBUG=yes

Once you enter the config mode, it is missing in your environment, meaning profiling data is not saved.

You can export the flag manually and find the data after performing the commit or other action.

export VYOS_DEBUG=yes

mkdir /opt/vyatta/share/vyatta-cfg/templates/test

vyos@vyos# cat node.def

---------------------------------------------------------------------------------------------------------

type: u32

help: test help

val_help: u32; test u32

syntax:expression: exec "

set -x

sleep 1

${vyos_libexec_dir}/validate-value --exec \"${vyos_validators_dir}/numeric --range 1-200\" --value \'$VAR(@)\'"; "invalid value"

---------------------------------------------------------------------------------------------------------

[edit]

vyos@vyos# set test 123

+ sleep 1

+ /usr/libexec/vyos/validate-value --exec '/usr/libexec/vyos/validators/numeric --range 1-200' --value 123

[edit]

vyos@vyos# commit

[ test 123 ]

+ sleep 1

+ /usr/libexec/vyos/validate-value --exec '/usr/libexec/vyos/validators/numeric --range 1-200' --value 123

---------------------------------------------------------------------------------------------------------After which you should see the traced time in the log:

cat /var/log/vyatta/cfg-stdout.log ------------------------------------- Action for " test 123 " took 1.011 sec to execute cp[/opt/vyatta/config/tmp/new_config_9203]->[/opt/vyatta/config/tmp/tmp_9203/work] no tap[/opt/vyatta/config/tmp/tmp_9203/active] cp[/opt/vyatta/config/tmp/new_config_9203]->[/opt/vyatta/config/tmp/tmp_9203/active]

I have verified one more scenario, sleeping 1 min instead of 1 sec. The result was correct:

[edit] vyos@vyos# set test 150 + sleep 61 + /usr/libexec/vyos/validate-value --exec '/usr/libexec/vyos/validators/numeric --range 1-200' --value 150 [edit] vyos@vyos# commit [ test 150 ] + sleep 61 + /usr/libexec/vyos/validate-value --exec '/usr/libexec/vyos/validators/numeric --range 1-200' --value 150

Traced time in the log:

cat /var/log/vyatta/cfg-stdout.log ------------------------------------------------- Action for " test 150 " took 61.013 sec to execute cp[/opt/vyatta/config/tmp/new_config_9203]->[/opt/vyatta/config/tmp/tmp_9203/work] no tap[/opt/vyatta/config/tmp/tmp_9203/active] cp[/opt/vyatta/config/tmp/new_config_9203]->[/opt/vyatta/config/tmp/tmp_9203/active]

For advanced analysis, you can collect perf samples for a flamegraph.

- Compile package with debug symbols present and install

export CFLAGS=" -fno-omit-frame-pointer -O0 -g -ggdb -rdynamic -no-pie" export CXXFLAGS=" -fno-omit-frame-pointer -O0 -g -ggdb -rdynamic -no-pie" export CCASFLAGS=" -fno-omit-frame-pointer -O0 -g -ggdb -rdynamic -no-pie" debuild -i -us -uc -b

- Install perf tooling and edit init service to collect the data

/usr/libexec/vyos/init/vyos-router -> laod_bootfile():

---------------------------------------------------------------------------

sg ${GROUP} -c "perf record -g -F 999 --call-graph dwarf \

$vyos_libexec_dir/vyos-boot-config-loader.py $BOOTFFILE"- Collect data afterwards - /data.perf

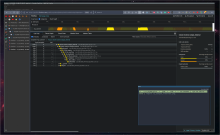

- Analyse using profiler.firefox.com / www.speedscope.app

http://jamie-wong.com/post/speedscope/ https://profiler.firefox.com/docs/#/

I`m going to try analyzing the performance issues for large configs like the following:

I have tried the 40k firewall-config, but my device ran out of the memory generating the perf.data

- The question is, how do I manually mount the overlay fs from the grub

- It would be great to find a minimal config where performance degradation is noticeable