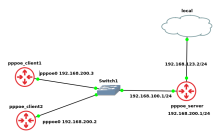

Test setup (taken from https://forum.vyos.io/t/flow-accounting-not-working-on-rolling-release/4438):

eth0 - uplink; its address is assigned via PPPoE.

eth1 - PPPoE server for internal clients.

We don't see flow counters for the ppp interfaces.

NAT is not used.

sever@prim# show interfaces

ethernet eth0 {

description Uplink-pppoe-client

duplex auto

pppoe 0 {

default-route auto

mtu 1492

name-server auto

password primpass

user-id primlogin

}

smp-affinity auto

speed auto

}

ethernet eth1 {

description PPPoE-server

duplex auto

smp-affinity auto

speed auto

}

PPPoE server configuration:

service {

pppoe-server {

access-concentrator ACNPRIM

authentication {

local-users {

username secondlogin {

password secondpass

}

}

mode local

}

client-ip-pool {

start xxx.xxx.242.245

stop xxx.xxx.242.245

}

dns-servers {

server-1 1.1.1.1

server-2 8.8.8.8

}

interface eth1

local-ip xxx.xxx.242.244

}

Flow accounting:

sever@prim# show system flow-accounting

interface eth0

interface eth1

interface pppoe0

netflow {

version 9

}

syslog-facility daemon

All flow counters are zero:

sever@prim:~$ show flow-accounting

flow-accounting for [eth0]

Src Addr Dst Addr Sport Dport Proto Packets Bytes Flows

Total entries: 0

Total flows : 0

Total pkts : 0

Total bytes : 0

flow-accounting for [eth1]

Src Addr Dst Addr Sport Dport Proto Packets Bytes Flows

Total entries: 0

Total flows : 0

Total pkts : 0

Total bytes : 0

flow-accounting for [pppoe0]

Src Addr Dst Addr Sport Dport Proto Packets Bytes Flows

Total entries: 0

Total flows : 0

Total pkts : 0

Total bytes : 0
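When re-testing, it can help to check the totals automatically instead of reading the output by eye. The following is a minimal sketch, not part of VyOS: it assumes the text of `show flow-accounting` has already been captured into a string, and the names `parse_flow_totals` and `silent_interfaces` are hypothetical helpers introduced here for illustration.

```python
import re

# One per-interface section of captured `show flow-accounting` output.
# Whitespace is matched loosely, so the text may be on one line or many.
SECTION = re.compile(
    r"flow-accounting for \[(?P<ifname>[^\]]+)\]"
    r".*?Total entries\s*:\s*(?P<entries>\d+)"
    r"\s+Total flows\s*:\s*(?P<flows>\d+)"
    r"\s+Total pkts\s*:\s*(?P<pkts>\d+)"
    r"\s+Total bytes\s*:\s*(?P<bytes>\d+)",
    re.DOTALL,
)

FIELDS = ("entries", "flows", "pkts", "bytes")


def parse_flow_totals(text):
    """Return {interface: {entries, flows, pkts, bytes}} from captured output."""
    return {
        m.group("ifname"): {field: int(m.group(field)) for field in FIELDS}
        for m in SECTION.finditer(text)
    }


def silent_interfaces(text):
    """Return the interfaces whose packet total is zero, in output order."""
    return [name for name, t in parse_flow_totals(text).items() if t["pkts"] == 0]


# Sample text copied from the output in this report (headers shortened).
SAMPLE = (
    "flow-accounting for [eth0] Src Addr Dst Addr "
    "Total entries: 0 Total flows : 0 Total pkts : 0 Total bytes : 0 "
    "flow-accounting for [eth1] Src Addr Dst Addr "
    "Total entries: 0 Total flows : 0 Total pkts : 0 Total bytes : 0 "
    "flow-accounting for [pppoe0] Src Addr Dst Addr "
    "Total entries: 0 Total flows : 0 Total pkts : 0 Total bytes : 0"
)

if __name__ == "__main__":
    print(silent_interfaces(SAMPLE))
```

For the output in this report, every configured interface ends up in the list returned by `silent_interfaces`.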

The ppp1 interface was created dynamically for the internal client:

sever@prim:~$ show pppoe-server sessions

ifname | username | calling-sid | ip | type | comp | state | uptime

--------+-------------+-------------------+----------------+-------+------+--------+----------

ppp1 | secondlogin | 55:55:00:99:55:55 | xxx.xxx.242.245 | pppoe | | active | 00:35:43
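Because session interfaces such as ppp1 are created on demand, their names are not known when the flow-accounting interface list is written. The sketch below is an illustration under stated assumptions, not a VyOS tool: it assumes the session table has been captured with one row per line, and `parse_sessions` and `not_in_flow_accounting` are hypothetical helper names. It only compares the session interface names with the list from `show system flow-accounting`; it does not establish why the counters are zero.

```python
def parse_sessions(text):
    """Parse the pipe-separated session table into a list of dicts."""
    # Keep only table rows; the dashed separator line contains no "|".
    rows = [line for line in text.splitlines() if "|" in line]
    if not rows:
        return []
    header = [cell.strip() for cell in rows[0].split("|")]
    sessions = []
    for line in rows[1:]:
        cells = [cell.strip() for cell in line.split("|")]
        if len(cells) == len(header):  # skip malformed rows
            sessions.append(dict(zip(header, cells)))
    return sessions


def not_in_flow_accounting(sessions, configured):
    """Return session interface names absent from the configured list."""
    return [s["ifname"] for s in sessions if s["ifname"] not in configured]


# Sample table and interface list copied from this report.
SESSIONS_SAMPLE = """\
ifname | username | calling-sid | ip | type | comp | state | uptime
--------+-------------+-------------------+----------------+-------+------+--------+----------
ppp1 | secondlogin | 55:55:00:99:55:55 | xxx.xxx.242.245 | pppoe | | active | 00:35:43
"""
CONFIGURED = ["eth0", "eth1", "pppoe0"]

if __name__ == "__main__":
    sessions = parse_sessions(SESSIONS_SAMPLE)
    print(not_in_flow_accounting(sessions, CONFIGURED))
```

With the data from this report, the only session interface is ppp1, and it is not among the interfaces listed under flow accounting.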

Show routes:

sever@prim:~$ show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route

K>* 0.0.0.0/0 [0/0] is directly connected, pppoe0, 00:42:50

C>* xxx.xx.242.17/32 is directly connected, pppoe0, 00:42:50

C>* xxx.xx.242.245/32 is directly connected, ppp1, 00:42:06